Jordan Ellenberg calls geometry “the cilantro of math,” which is to say that few people feel neutral about it. His own feelings about the subject are decidedly positive, and he evangelizes his positivity throughout the pages of Shape: The Hidden Geometry of Information, Biology, Strategy, Democracy, and Everything Else.

Shape is a far-reaching book, peppered with footnotes, Talking Heads references, and a surprising amount of poetry. In the introduction, Ellenberg references “the most famous geometry poem of all,” Edna St. Vincent Millay’s Euclid alone has looked on Beauty bare, mostly to establish that the book will soon leave Euclid behind. By the end of the book, it does feel as though Jordan has made good on his promise of telling the story of the hidden geometry of everything. Jordan recently joined SOW’s reading group to talk about the geometry of machine learning and gerrymandering, his literary influences, and how large language models affect his work. What follows is an edited and condensed transcript of our discussion.

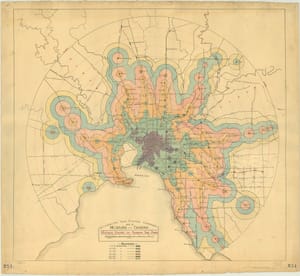

Hillary Predko: Your book exploded my idea of geometry, which is still firmly planted on the Euclidean plane – the examples all stretched the concept of what I would've considered geometric. Take distance: you explain how we could think of measuring distance on a map in a straight line, but we could also measure it in the time it takes to travel between two points, or we could count the number of city blocks between two points. Each of these changes in measurement implies a new geometry. Could you touch on how you conceive of and define different geometries?

Jordan Ellenberg: First of all, I would say that it's not just how I conceive of it! In modern mathematics, geometry does have this more general meaning that applies to any notion of distance. I don't get into it much in this book, but in informational geometry people even talk about the distance between two strings of bits, measuring where strings of ones and zeros differ (this is called the Hamming distance). Now, it's true that if you're in the airport and you pick up a book and it says it's about geometry, you expect to see some circles and triangles, not strings of binary. But I feel like as an author, I always want to give people stuff they didn't expect to find from the back cover.
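For readers who like to see the idea in code, here is a minimal sketch of three of the distances mentioned so far: straight-line distance, city-block distance, and the Hamming distance between bit strings. The function names and sample values are illustrative assumptions, not anything taken from Shape.

```python
import math


def euclidean_distance(p, q):
    """Straight-line distance between two points on a flat map."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def city_block_distance(p, q):
    """Distance measured in blocks walked along a street grid."""
    return abs(q[0] - p[0]) + abs(q[1] - p[1])


def hamming_distance(a, b):
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance needs strings of equal length")
    return sum(x != y for x, y in zip(a, b))


# Hypothetical sample points and bit strings, chosen only for illustration.
home, office = (0, 0), (3, 4)
print(euclidean_distance(home, office))
print(city_block_distance(home, office))
print(hamming_distance("10110", "10011"))
```

The same two points are a different distance apart depending on which function measures them, which is the sense in which each choice of measurement gives a different geometry.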

I know it seems strange at first to call some of this stuff geometry – like games, elections, or pandemics. But intuitively, people understand how these things are about distance. For example, we talk about close relatives versus distant relatives. If I said, "Oh, by a close relative, do you mean a guy who's standing next to you? And by a distant relative, do you mean somebody who lives in a different city?" You'd say, "Obviously, I mean something else." So, is that distance an analogy or a metaphor? Part of the lesson of the book is that it's not just a metaphor; there is an actual notion of geometry to our family trees. Your second cousins really are distant from you, with a notion of distance that can be defined and makes just as much sense as the geographic notion.
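One way to make the family-tree distance concrete is to count the steps up to the nearest shared ancestor and back down. The sketch below assumes that definition, which the interview does not spell out, and simplifies the tree to one recorded parent per person. The names and the `parent_of` table are hypothetical.

```python
def ancestor_depths(person, parent_of):
    """Map each ancestor of `person` (including the person) to steps away."""
    depths = {}
    steps = 0
    while person is not None:
        depths[person] = steps
        person = parent_of.get(person)  # None once the tree runs out
        steps += 1
    return depths


def family_distance(a, b, parent_of):
    """Steps from a up to the nearest shared ancestor, then down to b."""
    up_a = ancestor_depths(a, parent_of)
    up_b = ancestor_depths(b, parent_of)
    shared = set(up_a) & set(up_b)
    if not shared:
        return None  # no recorded common ancestor
    return min(up_a[x] + up_b[x] for x in shared)


# Hypothetical family: Ada is the great-grandparent of Fay and Gus.
parent_of = {
    "Ben": "Ada", "Cal": "Ada",  # siblings
    "Dee": "Ben", "Eli": "Cal",  # first cousins
    "Fay": "Dee", "Gus": "Eli",  # second cousins
}

print(family_distance("Ben", "Cal", parent_of))
print(family_distance("Dee", "Eli", parent_of))
print(family_distance("Fay", "Gus", parent_of))
```

Under this assumed definition, siblings sit closer together than first cousins, and first cousins closer than second cousins, regardless of where any of them happen to live.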

HP: This expands into some topics that I thought were quite surprising. You write about the geometry of neural networks, large language models, and other AI systems. You called generative AI “the geometry of letter strings.” How can understanding this geometry help us understand these systems? And does that help us develop a critical understanding of their outputs?

JE: I would call it more demystification than understanding, because nobody actually understands these systems. What I'm trying to do in the book is outline the fundamental trial and error process that underlies how these things are built. It demystifies the feeling that they’re magic. Since I wrote Shape, I've gotten more involved with those systems. I have a new paper coming out in a couple of weeks that is in this vein, and I have been working with some of the folks with access to these very powerful models. I am 100% on board with these being valuable tools, and I feel like even having the barest acquaintance with how they're built helps me not feel like somebody just summoned a demon. The guts are not that different from other optimization things that you do all the time. If you have done a linear regression, there are similarities. These systems are not linear regression, but it’s like they’re the descendant three steps down.

And you might say, “But how can [AI systems] do such amazing things?” Well, linear regression also does amazing things! When that was invented in the 19th century, it opened up entire new conceptual realms of understanding relationships between things. And it did feel magical that you could make these amazing predictions using this mathematical tool of linear regression that didn't exist before. So yeah, new tools are great, but they're also just math.

HP: I enjoyed the short chapter about the nature of problem-solving and work in an AI-saturated world. At one point you say that machine learning algorithms have “processing power but not taste.” How do you think about your work, as a mathematician, an educator, and a writer, in this rapidly changing technological landscape?
